Tiancheng (Kevin) Sun

孙天成

I am a senior research scientist at Google, working on Google Beam. I received my Ph.D. in Computer Science from the University of California, San Diego, where I was a Google Ph.D. Fellow advised by Prof. Ravi Ramamoorthi. I completed my undergraduate studies in the Yao Class at Tsinghua University.

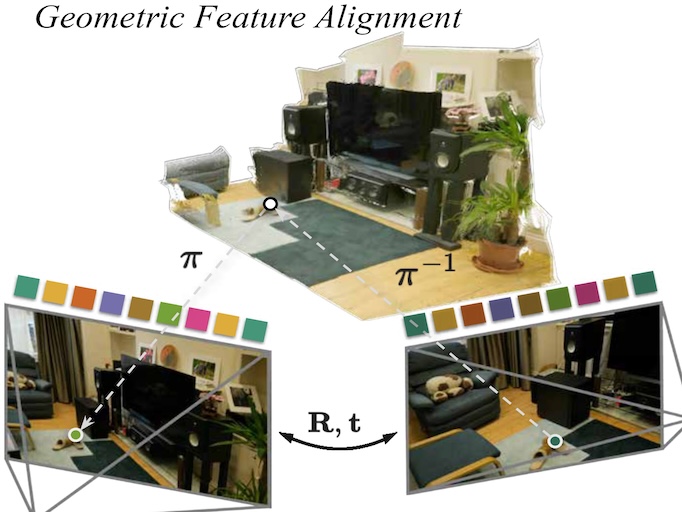

I am broadly interested in computer vision and graphics, with a particular focus on novel view synthesis and 3D reconstruction. Recently, I have been focusing more on 3D continuous learning over time.

Publications

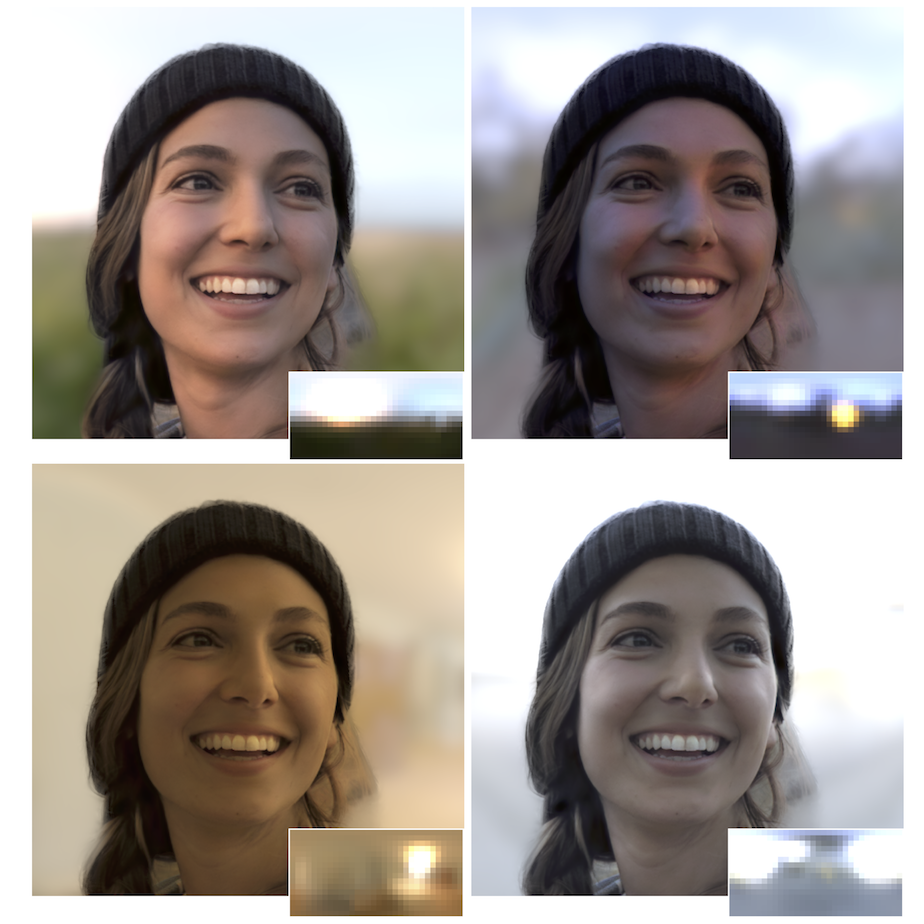

Quark: Real-time, High-resolution, and General Neural View Synthesis

John Flynn*, Michael Broxton*, Lukas Murmann*, Lucy Chai, Matthew DuVall, Clément Godard, Kathryn Heal, Srinivas Kaza, Stephen Lombardi, Xuan Luo, Supreeth Achar, Kira Prabhu, Tiancheng Sun, Lynn Tsai, Ryan Overbeck

SIGGRAPH Asia 2024

Awards & Honors

- 2024 — Best Paper Award, SIGGRAPH Asia

- 2019 — Google PhD Fellowship

Professional Services

- Technical Program Committee, SIGGRAPH 2026

- Reviewer, SIGGRAPH/SIGGRAPH Asia

- Reviewer, CVPR/ICCV/ECCV